Community

The Journey of Refactoring Graph Database: Migrating from OrientDB to NebulaGraph

Author: Aqi, a developer at Arch team of SMY Cloud

Aqi is a community member of NebulaGraph who has done system refactoring many times. According to Aqi, migrating from OrientDB to NebulaGraph might be the most difficult one and below he shares the journey of the migration.

The Reason for Migration

OrientDB was plagued by performance bottlenecks and single-point issues. In addition, the legacy system's tech stack couldn't support elastic scaling, and its monitoring and alerting facilities were inadequate. Thus, upgrading to NebulaGraph became a necessity.

I will detail the specific pain points we encountered in a subsequent article, so I won't elaborate further here.

Migration Challenges Identified

- Complexity and contextual vagueness: the system was developed on OrientDB back in 2016, which is quite dated. Plus, we have no clue of the historical backgrounds of the system building.

- An uncharted territory of business: the requests from the Big Data team were passed onto us in a sudden, and we hadn't been involved in this aspect of the business before.

- Unfamiliar technology stack: it was our first touch with graph databases: a concept foreign to the entire team. We had no previous experience with both OrientDB and NebulaGraph, and most of the legacy system was written in Scala. Additionally, it used HBase, Spark, Kafka, all of which were relatively unknown to us.

- Time urgency: we were asked to complete the refactoring within 2 months ;apparently, we were racing against time.

All the challenges above tell that we were in unfamiliar waters with both the business and the technology stack.

The Technical Blueprint

Research Undertaken

Given our unfamiliarity with the business, what research we had done to step in the situation?

- External Interface Review: We examined all external interfaces of the system, which included the interface name, its use, request volume QPS, average consumption time, and caller (services and IP).

- Core Workflow Review: We created an architectural diagram of the legacy system, and flowcharts for the important interfaces (approximately 10).

- Environment Review: We identified the projects that needed modifications, application deployments, MySQL, Redis, HBase cluster IPs, and sorted out the current online deployment branches.

- Trigger Scenarios: We determined how the interfaces were triggered by which business scenario, ensuring each interface was covered by at least one scenario. This would further support future functionality verification.

- Transformation Plan: We carried out feasibility analyses for each interface, planning how to transform them (changing OrientDB statements into NebulaGraph query statements), and how to revamp the graphing (writing process).

- New System Design: We created an architectural diagram and core process diagram for the new system.

Project Target Aligned

Our target was to transition the data source of the graph database from OrientDB to NebulaGraph, refactor the legacy system to a unified Java tech stack, and support horizontal service scalability.

Overall Solution Confirmed

We adopted a fairly aggressive approach as the solution was designed to be a 'once and for all' fix to facilitate future maintenance:

- Rewrite the underlying legacy system (starting at the interface entry point), ensuring the impact was manageable.

- Unify the Java tech stack and integrate with the company's unified service framework to enhance monitoring and maintenance.

- Make clear boundaries of the basic graph database application to simplify future integrations with the graph database for upper-layer applications.

Note: The diagram was created during the research phase; the detailed one won’t be illustrated as it pertains to business specifics.

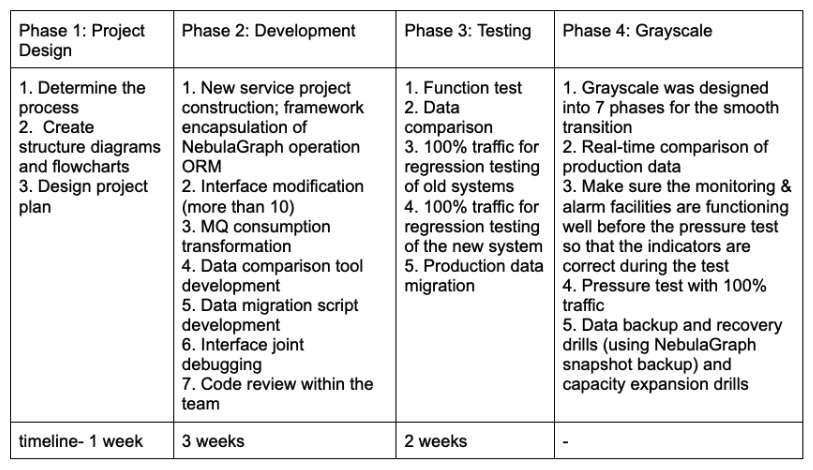

Project Phase Plan

Manpower invested: 4 developers, 1 QA developer

The main items and their duration are as follows:

Grayscale Plan Designed

Overall Strategy

- Write Requests: We employed synchronous dual writing.

- Read Requests: We gradually migrated traffic from smaller to larger volumes, ensuring a smooth transition.

Grayscale Phases

| Phase | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

|---|---|---|---|---|---|---|---|

| Volume | 0% | 1‰ | 1% | 10% | 20% | 50% | 100% |

| Number of grayscale days | 2 | 2 | 2 | 5 | 2 | 2 | - |

| Important node description | Synchronous dual writing, traffic playback sampling and comparison until 100% passed; | Pressure test required in this phase |

Notes:

- We used a configuration center switch for control, allowing us to switch back at any moment if issues arose, with recovery in seconds.

- Missed read interfaces had no impact on the project, only changes would have an effect.

- We used parameter hash values as keys to ensure consistency in results for multiple requests with the same parameters. A hit in grayscale was confirmed if abs(key) % 1000 < X (0< X < 1000, X is dynamically configured).

A side note: When refactoring, the most crucial part is the grayscale plan. Our grayscale plan this time could be regarded as a well-designed one: before the volume increase in grayscale phase, we used real-time online traffic to asynchronously compare data. Only after the comparison passed successfully did we proceed with the volume. This comparison phase took much longer than expected (in reality, it took 2 weeks and we discovered many issues).

Data Comparison Plan Settled

Missed grayscale data

The process for missing grayscale is as follows:

We started with the old system to see whether the sample was hit (sample ratio configuration 0% ~ 100%). If the sample was hit, the message queue (MQ) would be sent and dealt with within the new system. The new system interface would be requested and the data returned by the old system would be compared in JSON format. If the data matches, then the comparison would succeed. On the contrary, if the comparison is inconsistent, a notification would be sent in real time.

The same is true in reverse!!

Data Migration Plan Designed

- Full data (historical data): We wrote scripts for full migration: if inconsistency happens during the launch period, the data for the past 3 days which was stored in MQ would be consumed

- Incremental: Synchronous dual-write (there are few write interfaces, and the write request QPS is not high)

Transformation - Taking Subgraph Query as an Example

Before the transformation:

@Override

public MSubGraphReceive getSubGraph(MSubGraphSend subGraphSend) {

logger.info("-----start getSubGraph------(" + subGraphSend.toString() + ")");

MSubGraphReceive r = (MSubGraphReceive) akkaClient.sendMessage(subGraphSend, 30);

logger.info("-----end getSubGraph:");

return r;

}

After the transformation:

Define the gray service module interface

public interface IGrayService {

/**

* Whether to hit the gray service configuration value 0 ~ 1000; true: hit, false: miss

* @param hashCode

* @return

*/

public boolean hit(Integer hashCode);

/**

* Whether to sample; configuration value 0 ~ 100

*

* @return

*/

public boolean hitSample();

/**

* Send request-response data

* @param requestDTO

*/

public void sendReqMsg(MessageRequestDTO requestDTO);

/**

* @param methodKeyEnum

* @return

*/

public boolean hitSample(MethodKeyEnum methodKeyEnum);

}

The interface transformation is as follows, the kgpCoreService sends requests to the new system, the interface business logic remains consistent with old system, and the underlying graph database is changed to query NebulaGraph:

@Override

public MSubGraphReceive getSubGraph(MSubGraphSend subGraphSend) {

logger.info("-----start getSubGraph------(" + subGraphSend.toString() + ")");

long start = System.currentTimeMillis();

//1. Request grayscale

boolean hit = grayService.hit(HashUtils.getHashCode(subGraphSend));

MSubGraphReceive r;

if (hit) {

//2、Hit Grayscale and go through the new process

r = kgpCoreService.getSubGraph(subGraphSend); // Use Dubbo to call new services

} else {

//Here is the original process using akka communication

r = (MSubGraphReceive) akkaClient.sendMessage(subGraphSend, 30);

}

long requestTime = System.currentTimeMillis() - start;

//3.Sampling hits Sending data compared to MQ

if (grayService.hitSample(MethodKeyEnum.getSubGraph_subGraphSend)) {

MessageRequestDTO requestDTO = new MessageRequestDTO.Builder()

.req(JSON.toJSONString(subGraphSend))

.res(JSON.toJSONString(r))

.requestTime(requestTime)

.methodKey(MethodKeyEnum.getSubGraph_subGraphSend)

.isGray(hit).build();

grayService.sendReqMsg(requestDTO);

}

logger.info("-----end getSubGraph: {} ms", requestTime);

return r;

}

Benefits of Refactoring

After two months of hard work by the team, the grayscale plan has been successfully completed, with the following benefits:

- NebulaGraph itself supports distributed expansion; the new system service supports elastic scaling, and the overall performance supports horizontal expansion;

- From the pressure test results, the performance of the interface has improved significantly, and it can support requests far beyond expectations;

- It was integrated with the company's unified monitoring and alarm system, which is more conducive to later maintenance.

Conclusion

The refactoring was successfully completed, thanks to the partners who participated in this project, as well as the support from the big data and risk control teams. At the same time, we also thank the NebulaGraph community because they quickly helped troubleshoot some of the issues we encountered.