
Jan 19, 2021

Import data from Neo4j to NebulaGraph via Nebula Exchange: Best Practices

fengsen-neu

As data volumes surge, so does the demand for real-time data updates and queries, and Neo4j struggles to keep up. The Neo4j Community Edition runs on a single host and cannot scale out, so linear scalability and read/write splitting are not possible. The Community Edition also has data size limitations. To make things worse, the causal cluster in the Neo4j Enterprise Edition has a write performance bottleneck on its leader node.

By contrast, NebulaGraph's shared-nothing distributed architecture avoids the single-leader write bottleneck, and both its read and write capacity scale out. In practice, NebulaGraph handles very large datasets with hundreds of billions of vertices and edges.

In this article, I will show you how to import data from Neo4j to NebulaGraph with the official ETL tool, Nebula Exchange. I also cover the problems we ran into during the import and how we solved them.

Deployment Environment

Hardware configuration:

CPU name: Intel(R) Xeon(R) Silver 4114 CPU @ 2.20GHz

CPU cores: 40

Memory size: 376 GB

Disk: HDD

System: CentOS Linux release 7.4.1708 (Core)

Software information:

Neo4j: version 3.4, five-node causal cluster

NebulaGraph:

Version: NebulaGraph v1.1.0, compiled from source

Deployment: three-node cluster deployed on a single host

Exchange: nebula-java v1.1.0

Data warehouse:

hadoop-2.7.4

spark-2.3.1

NOTE: Port allocation when deploying multiple nodes on one host: each Storage Service internally uses its configured port number plus 1, so don't assign adjacent ports to different Storage Services.
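To make the port rule concrete, here is a small Python sketch that checks a planned set of Storage Service ports for collisions. It assumes, per the note, that each instance occupies its configured port and that port plus 1; the port numbers are hypothetical examples, not values from this deployment.

```python
from __future__ import annotations


def occupied_ports(port: int) -> set[int]:
    """Ports one Storage Service uses, assuming it also takes port + 1 internally."""
    return {port, port + 1}


def find_conflicts(ports: list[int]) -> list[tuple[int, int]]:
    """Return every pair of configured ports whose occupied port sets overlap."""
    conflicts = []
    for i, a in enumerate(ports):
        for b in ports[i + 1:]:
            if occupied_ports(a) & occupied_ports(b):
                conflicts.append((a, b))
    return conflicts


# Hypothetical plans for three Storage Services on one host.
print(find_conflicts([44500, 44501, 44510]))  # [(44500, 44501)]
print(find_conflicts([44500, 44510, 44520]))  # []
```

Spacing the ports by 10, as in the second plan, leaves room for the internal port of each instance.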

Full and Incremental Import

Full Import

Before importing data, create the schema in NebulaGraph based on the original Neo4j data. You can also design the schema around your actual business needs, so some properties may be NULL for the imported data. Confirm all the properties with your co-workers so that none are left out. As in MySQL, you can create a schema in NebulaGraph and alter its properties later. This applies to both tags and edge types.

Create tags and edge types in NebulaGraph
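As an illustration of this step, the Python sketch below renders nGQL 1.x CREATE TAG and CREATE EDGE statements from a property-to-type mapping. The `person` tag, the `follow` edge type, and their properties are hypothetical, not the schema used in this migration; run the generated statements in the console after selecting your graph space with USE.

```python
from __future__ import annotations

# nGQL 1.x property types accepted by this sketch.
NGQL_TYPES = {"string", "int", "double", "bool", "timestamp"}


def create_statement(kind: str, name: str, props: dict[str, str]) -> str:
    """Render a CREATE TAG or CREATE EDGE statement in nGQL 1.x syntax."""
    if kind not in ("TAG", "EDGE"):
        raise ValueError(f"unknown schema kind: {kind}")
    for prop, typ in props.items():
        if typ not in NGQL_TYPES:
            raise ValueError(f"unsupported type {typ!r} for property {prop!r}")
    columns = ", ".join(f"{prop} {typ}" for prop, typ in props.items())
    return f"CREATE {kind} {name}({columns});"


# Hypothetical schema mirroring a Neo4j label and relationship type.
print(create_statement("TAG", "person", {"name": "string", "age": "int"}))
# CREATE TAG person(name string, age int);
print(create_statement("EDGE", "follow", {"degree": "int"}))
# CREATE EDGE follow(degree int);
```

Keeping the mapping in one place makes it easier to review the property list with co-workers before anything is created.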

Modify the configuration file for the Exchange

At this time, Exchange doesn't support connecting to Neo4j with

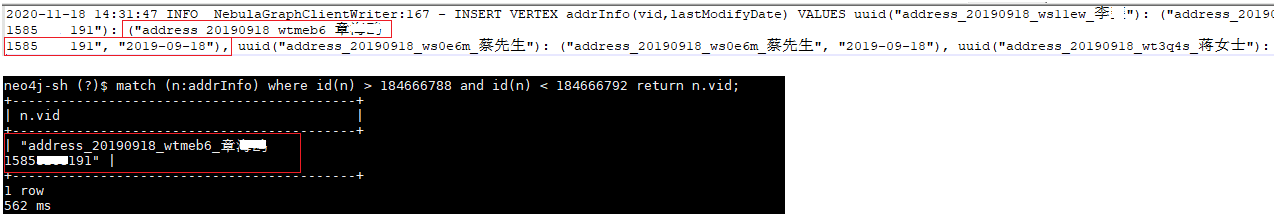

bolt+routing. If you're using causal cluster, choose a node to read data by using theboltmethod to release the cluster load.Our vid in Neo4j is stored as the string type. However, NebulaGraph v1.x doesn't support string vid, so we use uuid() to gender vids.

partition: the number of partitions Exchange uses to pull data from Neo4j.

batch: the batch size, that is, the number of records written to NebulaGraph in a single batch.
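To show what `batch` and uuid() vids mean for the generated writes, the Python sketch below groups rows into nGQL 1.x INSERT VERTEX statements, at most `batch` rows per statement, wrapping each Neo4j string id in uuid() (or hash()). This is not Exchange's own code; the tag, property names, and rows are hypothetical, and string values are assumed to contain no quotes or line breaks.

```python
from __future__ import annotations


def literal(value) -> str:
    """Render a Python value as an nGQL literal (strings assumed already safe)."""
    if isinstance(value, bool):  # check bool before int: bool is an int subclass
        return "true" if value else "false"
    if isinstance(value, str):
        return f'"{value}"'
    return str(value)


def insert_statements(tag: str, props: list[str], rows: list[dict], batch: int,
                      vid_func: str = "uuid") -> list[str]:
    """Group rows into INSERT VERTEX statements of at most `batch` rows each.

    Each row needs an "id" key (the Neo4j string id) plus one key per property.
    """
    if batch < 1:
        raise ValueError("batch must be at least 1")
    statements = []
    for start in range(0, len(rows), batch):
        values = []
        for row in rows[start:start + batch]:
            vid = f'{vid_func}("{row["id"]}")'
            fields = ", ".join(literal(row[p]) for p in props)
            values.append(f"{vid}:({fields})")
        statements.append(
            f"INSERT VERTEX {tag}({', '.join(props)}) VALUES {', '.join(values)};"
        )
    return statements


rows = [
    {"id": "a1", "name": "Tom", "age": 30},
    {"id": "a2", "name": "Amy", "age": 25},
    {"id": "a3", "name": "Bob", "age": 41},
]
for stmt in insert_statements("person", ["name", "age"], rows, batch=2):
    print(stmt)
# INSERT VERTEX person(name, age) VALUES uuid("a1"):("Tom", 30), uuid("a2"):("Amy", 25);
# INSERT VERTEX person(name, age) VALUES uuid("a3"):("Bob", 41);
```

A larger `batch` means fewer, bigger write requests; passing `vid_func="hash"` switches vid generation to hash().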

Run the import command

Confirm the imported data

NOTE: NebulaGraph 1.x only supports counting data with db_dump. In NebulaGraph 2.0, you can count data with nGQL statements.

Incremental Import

Incremental import works by splitting the Neo4j data on its auto-incrementing id(). In the exec item of the configuration file, add an id() range condition to the Neo4j Cypher statement. Before doing this, stop all data deletion in Neo4j. Neo4j reuses the id() values of deleted data, so if data is deleted during incremental import, a new record can receive an id() inside a range that has already been imported and is then never imported, which causes data loss. If you write data to both Neo4j and NebulaGraph at the same time, you don't need to worry about this problem.
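A minimal Python sketch of the id() range condition: given the lower and upper bounds of the next increment, it builds the Cypher statement to put in the exec item. The `Person` label and the returned properties are hypothetical, and the half-open range [low, high) is a choice made here so that consecutive increments neither overlap nor leave gaps.

```python
from __future__ import annotations


def incremental_cypher(label: str, props: list[str], low: int, high: int) -> str:
    """Cypher returning nodes of `label` whose internal id() is in [low, high)."""
    if low >= high:
        raise ValueError("empty id() range")
    columns = ["id(n) AS neo4j_id"] + [f"n.{p} AS {p}" for p in props]
    return (
        f"MATCH (n:{label}) WHERE id(n) >= {low} AND id(n) < {high} "
        f"RETURN {', '.join(columns)}"
    )


print(incremental_cypher("Person", ["name", "age"], 1000, 2000))
# MATCH (n:Person) WHERE id(n) >= 1000 AND id(n) < 2000 RETURN id(n) AS neo4j_id, n.name AS name, n.age AS age
```

For the next run, pass the previous `high` as the new `low`. This only stays correct while no deletions happen, for the id() reuse reason described above.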

Import Related Issues

Issue one: Exchange doesn't escape special characters such as line breaks. For example, if string data contains a line break, the insert operation fails.

Solution: PR https://github.com/vesoft-inc/nebula-java/pull/203, which fixes this, has been merged into the Exchange v1.0 branch.
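The PR is the fix to use. Purely to illustrate the problem, the Python sketch below escapes a value before it is embedded in a double-quoted nGQL string literal. The exact set of characters the PR handles is not stated here, so the escape mapping below is an assumption of this sketch.

```python
def escape_ngql_string(value: str) -> str:
    """Escape a value and wrap it as a double-quoted nGQL string literal."""
    # Backslash goes first so the escapes added afterwards are not doubled.
    replacements = [
        ("\\", "\\\\"),
        ('"', '\\"'),
        ("\n", "\\n"),
        ("\r", "\\r"),
        ("\t", "\\t"),
    ]
    for raw, escaped in replacements:
        value = value.replace(raw, escaped)
    return f'"{value}"'


# A value with an embedded line break, as in Issue one.
print(escape_ngql_string("first line\nsecond line"))
# "first line\nsecond line"
```

Without escaping, the raw line break would end up inside the INSERT statement and break it.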

Issue two: Exchange doesn't support importing NULL values. As mentioned earlier, you can add properties to some tags or edge types according to business needs. Properties that have no value in the source data are NULL, so Exchange throws an error during import.

Solution: modify the com.vesoft.nebula.tools.importer.processor.Processor#extraValue method to add a converted value for the NULL type.
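The Scala change itself is not shown here. The Python sketch below illustrates the idea only: when a property value is NULL (None), substitute a type-appropriate default before rendering the nGQL literal. The defaults in `NULL_DEFAULTS` are assumptions of this sketch; choose values that your queries can tell apart from real data.

```python
from __future__ import annotations

# Hypothetical defaults substituted for NULL, keyed by nGQL 1.x property type.
NULL_DEFAULTS = {
    "string": '""',
    "int": "0",
    "double": "0.0",
    "bool": "false",
    "timestamp": "0",
}


def property_literal(value, ngql_type: str) -> str:
    """Render a property as an nGQL literal, replacing NULL (None) with a default."""
    if value is None:
        return NULL_DEFAULTS[ngql_type]
    if ngql_type == "string":
        return f'"{value}"'
    if ngql_type == "bool":
        return "true" if value else "false"
    return str(value)


# "age" was added to the schema later, so the source row has no value for it.
row = {"name": "Tom", "age": None}
types = {"name": "string", "age": "int"}
print(", ".join(property_literal(row[p], t) for p, t in types.items()))
# "Tom", 0
```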

Optimize Import Efficiency

Increase the partition number and the batch value appropriately to improve import efficiency.
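How Exchange divides rows among partitions internally is not described here. As an assumption-labeled illustration of why both knobs matter, the Python sketch below splits an id() range into `partition` contiguous sub-ranges that could be read in parallel, and counts how many write batches a given `batch` value produces.

```python
from __future__ import annotations


def split_range(low: int, high: int, partitions: int) -> list[tuple[int, int]]:
    """Split [low, high) into at most `partitions` contiguous, near-equal sub-ranges."""
    if partitions < 1 or low >= high:
        raise ValueError("need partitions >= 1 and a non-empty range")
    size = (high - low + partitions - 1) // partitions  # ceiling division
    return [(start, min(start + size, high)) for start in range(low, high, size)]


def write_batches(rows: int, batch: int) -> int:
    """Number of write batches needed for `rows` records with batch size `batch`."""
    if batch < 1:
        raise ValueError("batch must be at least 1")
    return (rows + batch - 1) // batch  # ceiling division


print(split_range(0, 10, 3))  # [(0, 4), (4, 8), (8, 10)]
print(write_batches(1_000_000, 500))  # 2000
```

More partitions let more readers run at once; a larger batch cuts the number of round trips to NebulaGraph.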

If your vids are strings, use the hash() function instead of uuid() to generate them. NebulaGraph 2.0 supports string vids.

The official recommendation is to set the master of the spark-submit command to yarn-cluster. If you don't use YARN, set it to spark://ip:port. We used spark-submit --master "local[16]" to increase Spark concurrency, which raised the import rate by more than four times. In our test (three nodes on one host), IO peaked at 200-300 MB/s. However, setting --master "local[16]" causes Hadoop FileSystem cache issues. You can fix this by adding the HDFS configuration fs.hdfs.impl.disable.cache=true.
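A sketch of the submit command as a Python argument list, which can be passed to `subprocess.run`. `--class`, `--master`, and `--conf` are standard spark-submit options, and Spark copies `spark.hadoop.*` properties into the Hadoop configuration, which is one way to apply fs.hdfs.impl.disable.cache=true. The main class name, the jar and config file names, and the `-c` flag are assumptions; check the Exchange README for your version.

```python
from __future__ import annotations

import shlex


def spark_submit_args(jar: str, config: str, master: str = "local[16]") -> list[str]:
    """Build a spark-submit command line for running Exchange."""
    return [
        "spark-submit",
        # Assumption: the Exchange main class in nebula-java v1.x.
        "--class", "com.vesoft.nebula.tools.importer.Exchange",
        "--master", master,
        # Spark copies spark.hadoop.* properties into the Hadoop Configuration;
        # here it sets fs.hdfs.impl.disable.cache=true.
        "--conf", "spark.hadoop.fs.hdfs.impl.disable.cache=true",
        jar,
        # Assumption: Exchange reads its configuration file path from -c.
        "-c", config,
    ]


# Hypothetical file names; shlex.join quotes local[16] for the shell.
print(shlex.join(spark_submit_args("exchange-1.1.0.jar", "neo4j_application.conf")))
```

In Spark 2.x, the yarn-cluster master value is deprecated; the equivalent is `--master yarn --deploy-mode cluster`.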

Conclusion

Although I ran into some problems during the import, the community helped quickly. Many thanks to the community and the NebulaGraph staff. I hope the openCypher-compatible NebulaGraph 2.0 comes out soon.

References

https://github.com/vesoft-inc/nebula-java/tree/v1.0

https://docs.nebula-graph.com.cn/manual-CN/2.query-language/2.functions-and-operators/uuid/

http://arganzheng.life/hadoop-filesystem-closed-exception.html
